To investigate the performance of the agent under another loading condition, the structure subjected to the loads shown in Figure 6A, denoted as loading condition L2, is also optimized using the same agent. The topology two steps before the terminal state contains successive V-shaped braces and is stable and statically determinate.
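Whether a topology can be statically determinate is commonly screened with Maxwell's counting rule for planar pin-jointed trusses, m + r - 2j = 0, where m is the number of members, r the number of support reactions, and j the number of nodes. The sketch below is an illustration only, not part of the authors' method: the count is a necessary condition, so a topology that passes must still be checked for stability, and the three-node example is hypothetical.

```python
def static_indeterminacy(num_members: int, num_reactions: int, num_nodes: int) -> int:
    """Maxwell's counting rule for a planar pin-jointed truss: m + r - 2j."""
    return num_members + num_reactions - 2 * num_nodes


def classify_truss(num_members: int, num_reactions: int, num_nodes: int) -> str:
    """Classify by counting only; stability must still be checked separately."""
    degree = static_indeterminacy(num_members, num_reactions, num_nodes)
    if degree < 0:
        return "mechanism"  # too few members/reactions: always unstable
    if degree == 0:
        return "statically determinate if stable"
    return "statically indeterminate if stable"


# Two pin supports give r = 4 reactions (hypothetical 3-node example).
print(classify_truss(2, 4, 3))  # statically determinate if stable
print(classify_truss(3, 4, 3))  # statically indeterminate if stable
```

Adding a bar directly between the two pin supports raises the count by one, which is why such a bar is redundant in this toy case.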

It is verified from the numerical examples that the trained agent acquired a policy that reduces the total structural volume while satisfying the stress and displacement constraints. It is confirmed from the history of cumulative rewards that the agent successfully improves its policy to eliminate unnecessary members as the training proceeds.

GA, which is inspired by the process of natural selection, is one of the most prevalent metaheuristic approaches for binary optimization problems (Mitchell, 1998). The benchmark solutions for the 3×2-grid truss provided in Table 3 are globally optimal; this global optimality is verified through enumeration, which took 44.1 h for each boundary condition.

As is common for neural networks, a back-propagation method (Rumelhart et al., 1986), which is a gradient-based method for minimizing the loss function, can be used for solving Equation (11). The set of trainable parameters saved after the 5,000-episode training is regarded as the best parameters.

From these results, the agent is confirmed to perform well for a different loading condition. Note that the connection between the members in each pair is an unstable node, which must be fixed to generate a single long member.

In utilizing the trained agent in Example 1, $n_{\mathrm{load}}!$ different removal sequences can be obtained for different orders of the same set of load cases in the node state data $v_k$. For example, exchanging the values at indices 2 and 4 and those at indices 3 and 5 in $v_k$ maintains the original loading condition but may lead to different actions during the member removal process, because the neural network outputs different Q values after the exchange.

The proposed method for training the agent is expected to become a supporting tool that instantly feeds back a sub-optimal topology and enhances design exploration.
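The number of load-case orderings can be made concrete with a short sketch that permutes the load-case blocks of a node state vector. The layout used here (two leading entries followed by an (x, y) load pair per load case, consistent with the exchange of indices 2↔4 and 3↔5 described above) is an assumption for illustration, as is the function name `load_case_orderings`; feeding each variant to the trained network could yield up to $n_{\mathrm{load}}!$ distinct removal sequences.

```python
from itertools import permutations
from math import factorial


def load_case_orderings(v, offset=2, width=2, n_load=2):
    """Yield copies of a node state vector with its load-case blocks reordered.

    Hypothetical layout: entries v[offset + i*width : offset + (i+1)*width]
    hold the load components of load case i; all other entries are kept.
    """
    head = list(v[:offset])
    blocks = [list(v[offset + i * width: offset + (i + 1) * width]) for i in range(n_load)]
    tail = list(v[offset + n_load * width:])
    for order in permutations(range(n_load)):
        reordered = head[:]
        for i in order:
            reordered.extend(blocks[i])
        yield reordered + tail


# Hypothetical v_k: two leading entries, then (x, y) loads of two load cases.
v_k = [1.0, 0.0, 0.0, -1.0, 1.0, 0.0]
variants = list(load_case_orderings(v_k))
print(len(variants) == factorial(2))  # True
print(variants[1])  # [1.0, 0.0, 1.0, 0.0, 0.0, -1.0]
```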
The upper-bound stress is 200 N/mm² for both tension and compression for all examples.

In this way, features of each member that reflect its connectivity can be extracted.
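To illustrate how a member (edge)-based graph embedding can extract features that reflect connectivity, the sketch below treats two members as adjacent when they share a node and repeatedly mixes each member's own features with the summed embeddings of its neighbours. It is a structure2vec-style sketch under stated assumptions (ReLU activation, summation aggregation, random weights, and the hypothetical names `member_adjacency` and `embed_members`), not the authors' exact formulation.

```python
import numpy as np


def member_adjacency(connectivity):
    """Members are adjacent if they share a node; connectivity[m] = (node_i, node_j)."""
    n = len(connectivity)
    adj = np.zeros((n, n))
    for a, ends_a in enumerate(connectivity):
        for b, ends_b in enumerate(connectivity):
            if a != b and set(ends_a) & set(ends_b):
                adj[a, b] = 1.0
    return adj


def embed_members(features, adj, w_self, w_neigh, n_iter=3):
    """Iterative message passing over members.

    features: (m, d_in) raw member features; w_self: (d_in, d); w_neigh: (d, d).
    Each iteration combines a member's own features with the sum of its
    neighbours' current embeddings, followed by a ReLU.
    """
    mu = np.zeros((features.shape[0], w_self.shape[1]))
    for _ in range(n_iter):
        mu = np.maximum(0.0, features @ w_self + adj @ mu @ w_neigh)
    return mu


rng = np.random.default_rng(0)
conn = [(0, 1), (1, 2), (2, 3), (0, 2)]  # hypothetical 4-member truss
adj = member_adjacency(conn)
emb = embed_members(rng.normal(size=(4, 3)), adj,
                    rng.normal(size=(3, 8)), rng.normal(size=(8, 8)))
print(emb.shape)  # (4, 8)
```

After k iterations each embedding depends on members up to k adjacency steps away, which is how connectivity enters the member features.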

Although CNN-based convolution is difficult to apply to trusses because they cannot be handled as pixel-wise data, convolution is successfully implemented for trusses by introducing graph embedding, which is extended in this paper from the standard node-based formulation to a member (edge)-based formulation.

In Equation (10), the action value is updated so as to minimize the difference between the sum of the observed reward and the estimated action value at the next state, $r(s') + \gamma \max_{a'} Q(s', a')$, and the estimated action value at the previous state, $Q(s, a)$. The trainable parameters are optimized by a back-propagation method to minimize the loss function computed from the estimated action value and the observed reward.

If the stress and displacement constraints are satisfied, the penalty terms become zero and the cost function becomes equivalent to the total structural volume $V(A)$.

The agent trained in Example 1 is reused for a smaller 3×2-grid truss without re-training. Furthermore, the robustness of the proposed method is investigated by performing the 2,000-episode training 20 times with different random seeds.

In loading condition L1, the two left corners, nodes 1 and 7, are pin-supported, and rightward and downward unit loads are applied separately at the bottom-right corner, node 43, as shown in Figure 11A.

The datasets generated for this study are available on request to the corresponding author. Received: 22 November 2019; Accepted: 09 April 2020; Published: 30 April 2020. No use, distribution or reproduction is permitted which does not comply with these terms.
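The update described for Equation (10) can be illustrated with a tabular one-step Q-learning sketch. The paper approximates Q with a neural network trained by back-propagation; the lookup table, the learning rate `alpha`, the discount factor `gamma`, and the action labels below are hypothetical simplifications chosen only to show how the target $r(s') + \gamma \max_{a'} Q(s', a')$ is formed and how $Q(s, a)$ moves toward it.

```python
from collections import defaultdict


def q_learning_update(q, s, a, r, s_next, next_actions, alpha=0.1, gamma=0.99):
    """One-step Q-learning on a table q[(state, action)].

    The target is r + gamma * max_a' q[(s_next, a')]; if next_actions is empty
    (terminal state) the target is r alone. Returns the TD error.
    """
    target = r
    if next_actions:
        target += gamma * max(q[(s_next, a2)] for a2 in next_actions)
    td_error = target - q[(s, a)]
    q[(s, a)] += alpha * td_error
    return td_error


q = defaultdict(float)
q[("s1", "remove_3")] = 1.0
err = q_learning_update(q, "s0", "remove_1", 0.5, "s1", ["remove_2", "remove_3"],
                        alpha=0.5, gamma=0.9)
print(round(err, 6), round(q[("s0", "remove_1")], 6))  # 1.4 0.7
```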
The agent is trained and its performance is tested for a simple planar truss in Section 4.1. The training method for tuning the parameters is described below. RMSprop (Tieleman and Hinton, 2012) is adopted as the optimization method in this study. In total, the training covers 2 × 2 × 20 × 20 = 1,600 combinations of support and loading conditions, and these combinations are simulated almost equally often as long as the number of training episodes is sufficient.

Figure 4 plots the history of cumulative rewards in the test simulation, recorded every 10 episodes. These results imply that the proposed method is robust against the randomness of boundary conditions and actions during the training.

The removal sequence of members is illustrated in Figure 6B. Similarly to loading condition L1 in Figure 5, several symmetric topologies are observed during the removal process, and the sub-optimal topology is a well-converged solution that does not contain unnecessary members.

As Example 3, the agent is applied without re-training to the larger-scale truss shown in Figure 10. Even in this irregular case, the agent successfully obtained the sparse optimal solution, as shown in Figure 12.

We use a PC with an Intel(R) Core(TM) i9-7900X CPU @ 3.30 GHz.

Training workflow utilizing RL and graph embedding.

Comparison between the proposed method (RL+GE) and GA in terms of total structural volume V [m³] and CPU time for one optimization t [s] using benchmark solutions.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY).
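For reference, the RMSprop rule keeps a running average of squared gradients and divides each gradient component by its square root, which evens out step sizes across parameters. The sketch below shows one update step and a toy quadratic check; the learning rate, the decay rate of 0.9, and `eps` are illustrative values, not the settings used in this study.

```python
import numpy as np


def rmsprop_step(theta, grad, mean_sq, lr=1e-2, decay=0.9, eps=1e-8):
    """One RMSprop update.

    mean_sq is a running average of squared gradients; each parameter's step is
    its gradient divided by the root of that average, scaled by lr.
    """
    mean_sq = decay * mean_sq + (1.0 - decay) * grad ** 2
    theta = theta - lr * grad / (np.sqrt(mean_sq) + eps)
    return theta, mean_sq


# Toy check: minimise f(theta) = sum(theta**2), whose gradient is 2*theta.
theta = np.array([1.0, -2.0])
mean_sq = np.zeros_like(theta)
for _ in range(2000):
    theta, mean_sq = rmsprop_step(theta, 2.0 * theta, mean_sq)
print(np.abs(theta).max() < 0.05)  # True
```

In the training described here the gradient would come from back-propagating the loss of Equation (11) over a minibatch rather than from a closed-form quadratic.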
Load and support conditions are randomly provided according to a rule so that the agent can be trained to perform well for various boundary conditions. Furthermore, after being trained for a specific truss with various loading and boundary conditions, the agent is applicable to trusses with different topology, geometry, and loading and boundary conditions.

Therefore, the topology just before the terminal state is regarded as a sub-optimal topology, which is a truss with 12 members as shown in Figure 5B.

In the first boundary condition B1, as shown in Figure 8A, the left tip nodes 1 and 3 are pin-supported, and the bottom-right nodes 7 and 10 are each subjected to a downward unit load of 1 kN as separate loading cases.

Topologies at steps 37, 60, 84, 100, 144, and 145 of the removal sequence are illustrated in Figure 11B.

Figure 8. Boundary condition B1 of Example 2; (A) initial GS, (B) removal sequence of members.
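The episode structure implied here, in which members are removed one at a time and the topology just before the terminal state is kept as the sub-optimal solution, can be sketched as a simple loop. `choose_member` stands in for the trained agent and `is_feasible` for the stability and stress/displacement checks; both are hypothetical placeholders, and the toy rule in the example has no structural meaning.

```python
def removal_episode(members, choose_member, is_feasible):
    """Remove members one at a time until a removal violates the constraints.

    Returns the last feasible member set (the sub-optimal topology) and the
    sequence of accepted removals.
    """
    current = frozenset(members)
    sequence = []
    while current:
        member = choose_member(current)
        candidate = current - {member}
        if not is_feasible(candidate):
            break  # terminal state reached; keep the topology just before it
        current = candidate
        sequence.append(member)
    return current, sequence


# Toy rule: a topology is "feasible" while at least 3 members remain.
final, seq = removal_episode(range(6), min, lambda s: len(s) >= 3)
print(sorted(final), seq)  # [3, 4, 5] [0, 1, 2]
```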
Because the optimization problem (Equation 3) contains constraint functions, the cost function F used in GA is defined using penalty terms for the stress and displacement constraints; both penalty coefficients are set to 1000 in this study.
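Because the explicit form of the penalized cost function is not reproduced in this section, the sketch below assumes a common form: the total volume plus the sums of normalized constraint violations max(0, |σ|/σ̄ - 1) for stresses and displacements, each weighted by a coefficient of 1000. The stress bound of 200 N/mm² is taken from the examples; the displacement bound `disp_max` and the violation measure are assumptions. When all constraints are satisfied, the penalty terms vanish and the cost equals the volume.

```python
import numpy as np


def penalized_cost(volume, stresses, displacements, sigma_max=200.0, disp_max=1.0,
                   c_stress=1000.0, c_disp=1000.0):
    """Volume plus penalty terms for normalised stress and displacement violations.

    volume: total structural volume V(A); stresses in N/mm^2 for all members and
    load cases; displacements for all constrained DOFs and load cases.
    disp_max and the max(0, ratio - 1) penalty form are assumptions.
    """
    stress_violation = np.maximum(0.0, np.abs(stresses) / sigma_max - 1.0).sum()
    disp_violation = np.maximum(0.0, np.abs(displacements) / disp_max - 1.0).sum()
    return volume + c_stress * stress_violation + c_disp * disp_violation


print(penalized_cost(2.5, np.array([150.0, -199.0]), np.array([0.4])))  # 2.5
print(penalized_cost(2.5, np.array([250.0, -100.0]), np.array([0.4])))  # 252.5
```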

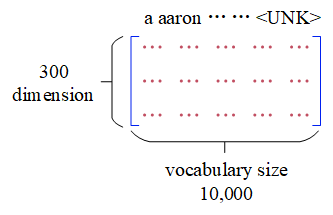

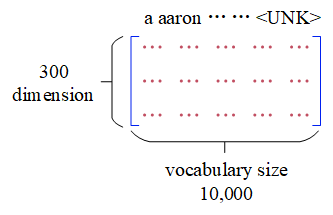

The parameters are tuned using a method based on 1-step Q-learning, a frequently used RL method. In the optimization with RMSprop, 32 datasets are randomly chosen from the stored transitions to create a minibatch, and the set of trainable parameters is updated based on the mean squared error, over the minibatch, of the loss computed for each dataset by the right-hand side of Equation (11).

Two pin-supports are randomly chosen: one from nodes 1 and 2 and the other from nodes 4 and 5. During the test, the cumulative reward until the terminal state is recorded using the greedy policy without randomness (i.e., the ε-greedy policy with ε = 0).

The GS consists of 6×6 grids, and the number of members is more than twice that of the 4×4-grid truss.

It is also advantageous that the agent can easily be replicated and used on other computers by importing the trained parameters. In addition, the accuracy of the trained agent depends little on the size of the problem: the trained agent reached a presumably global optimum for the 10×10-grid truss under loading condition L1, although it was trapped in a local optimum for the 8×8-grid truss under the same loading condition, and even for the 3×2-grid truss under boundary condition B1.

Background information of deep learning for structural engineering.

Loading condition L2 of Example 3; (A) initial GS, (B) removal sequence of members.

Figure 4. History of cumulative reward of each test measured every 10 episodes.

Keywords: topology optimization, binary-type approach, machine learning, reinforcement learning, graph embedding, truss, stress and displacement constraints

Citation: Hayashi K and Ohsaki M (2020) Reinforcement Learning and Graph Embedding for Binary Truss Topology Optimization Under Stress and Displacement Constraints.
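The two ingredients described above, ε-greedy action selection (with ε = 0 during the test) and random minibatches of 32 transitions drawn from the stored transitions, can be sketched as follows. The memory capacity, the transition tuple layout, and the action names are hypothetical; only the minibatch size of 32 and the greedy test policy come from the text.

```python
import random
from collections import deque


def epsilon_greedy(q_values, epsilon, rng=random):
    """q_values maps action -> estimated Q; epsilon = 0 gives the purely greedy test policy."""
    if rng.random() < epsilon:
        return rng.choice(list(q_values))
    return max(q_values, key=q_values.get)


class ReplayMemory:
    """Stores transitions (s, a, r, s_next, done); capacity is a hypothetical setting."""

    def __init__(self, capacity=10000):
        self.buffer = deque(maxlen=capacity)  # oldest transitions are discarded first

    def push(self, s, a, r, s_next, done):
        self.buffer.append((s, a, r, s_next, done))

    def sample(self, batch_size=32, rng=random):
        """Draw a minibatch of distinct transitions uniformly at random."""
        return rng.sample(list(self.buffer), batch_size)


memory = ReplayMemory(capacity=100)
for t in range(250):
    memory.push(t, t % 5, -1.0, t + 1, False)
batch = memory.sample(32)
print(len(memory.buffer), len(batch))  # 100 32
print(epsilon_greedy({"remove_1": 0.2, "remove_2": 0.9}, epsilon=0.0))  # remove_2
```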

References

Achtziger, W., and Stolpe, M. (2009). Global optimization of truss topology with discrete bar areas, part II: implementation and numerical results. Comput. Optim. Appl. 44, 315–341.

Dorn, W. S., Gomory, R. E., and Greenberg, H. J. (1964). Automatic design of optimal structures. J. Mécanique 3, 25–52.

Guo, X., Du, Z., Cheng, G., and Ni, C. (2013). Symmetry properties in structural optimization: some extensions. Struct. Multidiscip. Optim. 47, 783–794. doi: 10.1007/s00158-012-0877-2

Hagishita, T., and Ohsaki, M. (2009). Topology optimization of trusses by growing ground structure method. Struct. Multidiscip. Optim. 37, 377–393.

He, K., Zhang, X., Ren, S., and Sun, J. (2016). Deep residual learning for image recognition. Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), 770–778. doi: 10.1109/CVPR.2016.90

Krizhevsky, A., Sutskever, I., and Hinton, G. E. (2012). ImageNet classification with deep convolutional neural networks. Proc. 25th Int. Conf. Neural Inf. Process. Syst., Vol. 1, NIPS'12 (Curran Associates Inc.), 1097–1105.

Mitchell, M. (1998). An Introduction to Genetic Algorithms. Cambridge, MA: MIT Press.

Mnih, V., Kavukcuoglu, K., Silver, D., Rusu, A. A., et al. (2015). Human-level control through deep reinforcement learning. Nature 518, 529–533. doi: 10.1038/nature14236

Ohsaki, M. (1995). Genetic algorithm for topology optimization of trusses. Comput. Struct. 57, 219–225. doi: 10.1016/0045-7949(94)00617-C

Ohsaki, M., and Hayashi, K. (2017). Force density method for simultaneous optimization of geometry and topology of trusses. Struct. Multidiscip. Optim. 56, 1157–1168. doi: 10.1007/s00158-017-1710-8

Ohsaki, M., and Katoh, N. (2005). Topology optimization of trusses with stress and local constraints on nodal stability and member intersection. Struct. Multidiscip. Optim. 29, 190–197.

Ringertz, U. T. (1986). A branch and bound algorithm for topology optimization of truss structures. Eng. Optim. 10, 111–124.

Rumelhart, D. E., Hinton, G. E., and Williams, R. J. (1986). Learning representations by back-propagating errors. Nature 323, 533–536. doi: 10.1038/323533a0

Sheu, C. Y., and Schmit, L. A. Jr. (1972). Minimum weight design of elastic redundant trusses under multiple static loading conditions. AIAA J. 10, 155–162.

Silver, D., Schrittwieser, J., Simonyan, K., et al. (2017). Mastering the game of Go without human knowledge. Nature 550, 354–359. doi: 10.1038/nature24270

Sutton, R. S., and Barto, A. G. (1998). Introduction to Reinforcement Learning. Cambridge, MA: MIT Press.

Tamura, T., Ohsaki, M., and Takagi, J. (2018). Machine learning for combinatorial optimization of brace placement of steel frames. Jpn. Archit. Rev. 1, 419–430.

Tieleman, T., and Hinton, G. (2012). Lecture 6.5-rmsprop: divide the gradient by a running average of its recent magnitude. COURSERA: Neural Networks for Machine Learning.

Topping, B., Khan, A., and Leite, J. (1996). Topological design of truss structures using simulated annealing.